Microsoft’s AI Release, Copilot, Has Security Implications

- 20 February 2024

Artificial intelligence (AI) has long been a staple of science fiction, imagined in countless forms, from chess-playing machines to self-driving cars. Today, these visions are becoming reality thanks to advances in machine learning and deep learning.

What is Machine Learning?

Machine learning is a way of teaching a computer to learn from examples. In its most common form, the computer is shown lots of examples (data), told what each one represents (labelling), and then works out the patterns on its own.
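To make the idea of learning from labelled examples concrete, here is a minimal sketch in Python. It is a toy illustration only: the weather data, the labels, and the `train` and `predict` helpers are all made up for this post, and real systems use far more data and more capable algorithms. The "pattern" it learns is simply the average of the examples seen for each label.

```python
# Toy supervised learning: learn from labelled examples, then classify new data.
# The data, labels, and function names are invented purely for illustration.

def train(examples):
    """Compute the average feature vector (centroid) for each label."""
    sums, counts = {}, {}
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def predict(model, features):
    """Return the label whose centroid is closest to the new example."""
    def squared_distance(centroid):
        return sum((c - f) ** 2 for c, f in zip(centroid, features))
    return min(model, key=lambda label: squared_distance(model[label]))


# Labelled examples: (hours of sunshine, millimetres of rain) -> label
examples = [
    ((9.0, 1.0), "sunny"),
    ((8.0, 2.0), "sunny"),
    ((2.0, 9.0), "rainy"),
    ((1.0, 8.0), "rainy"),
]

model = train(examples)
print(predict(model, (7.5, 1.5)))  # prints "sunny"
```

Nobody wrote a rule saying what counts as a sunny day. The program worked it out from the labelled examples, which is the essence of machine learning.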

What is Deep Learning?

Deep learning is a specific type of machine learning that’s loosely inspired by how our brains work. Rather than learning patterns in a single step, as many traditional machine learning methods do, deep learning stacks many layers, each building on the one before, to learn increasingly complex features from the data.
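The layering idea can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the weights below are hand-picked rather than learned, the function names are our own, and a real deep learning model would have many more layers and would adjust its weights automatically during training.

```python
# Toy "deep" network: each layer transforms the output of the layer before it.
# Weights are hand-picked for illustration; in practice they are learned from data.

def relu(values):
    """Keep positive values and replace negative ones with zero."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One layer: a weighted sum of the inputs plus a bias, per output value."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Pass the inputs through every layer in turn."""
    activations = inputs
    for weights, biases in layers:
        activations = relu(dense(activations, weights, biases))
    return activations


layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),  # layer 1: two inputs -> two features
    ([[1.0, 1.0]], [-1.0]),                   # layer 2: two features -> one output
]

print(forward([2.0, 1.0], layers))  # prints [1.5]
```

The second layer never sees the raw inputs, only the features produced by the first. Stacking layers in this way is what lets deep learning build complex features out of simpler ones.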

The Power of Copilot

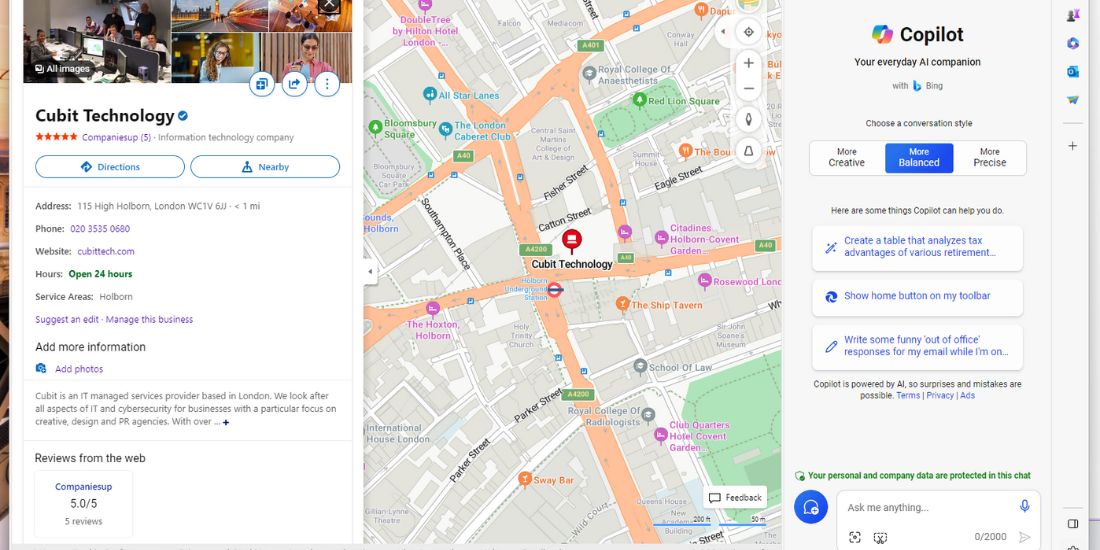

Microsoft’s new AI release, Copilot, is a testament to this progress. It is an AI assistant that lives inside each of your Microsoft 365 apps — Word, Excel, PowerPoint, Teams and Outlook. It’s designed to perform specific tasks that aid in problem-solving and enhance productivity. We’ve used it and found it very helpful!

What sets Copilot apart from other AI tools is its ability to draw on everything you can access in Microsoft 365. It gathers and compiles data from across your documents, presentations, emails, calendars, notes, and contacts. This allows the apps to understand the context of what is being written and to suggest relevant documentation and best practices.

Security Concerns

However, with great power comes great responsibility. Because Copilot can reach all the sensitive data that a user can access, it presents significant security concerns. Approximately 10% of a company’s Microsoft 365 data is open to all employees, which means that Copilot can potentially access a vast amount of sensitive information on any employee’s behalf.

Moreover, because of the access it is granted, Copilot can rapidly generate new sensitive data, and this data must be protected too. For example, conversations that are recorded in a meeting and then transcribed and summarised by Copilot could be harmful to an organisation if mismanaged. This raises questions about data privacy and security, especially in a world where data breaches and cyber threats are increasingly common.
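One practical first step is to find out how much sensitive material is open to everyone before an AI assistant can surface it. The Python sketch below shows the idea under loud assumptions: `SharedItem`, its fields, and the sample inventory are hypothetical and do not correspond to any Microsoft 365 API. In practice the inventory would come from your own sharing and permissions reports.

```python
# Sketch of an oversharing check. Everything here is hypothetical: the record
# format, the scope names, and the sample data are invented for illustration.
from dataclasses import dataclass


@dataclass
class SharedItem:
    path: str
    shared_with: str  # assumed scopes: "private", "team", or "organisation"
    sensitive: bool   # assumed flag, e.g. from a manual classification exercise


def overshared(items):
    """Return sensitive items that every employee can open."""
    return [i for i in items if i.sensitive and i.shared_with == "organisation"]


def organisation_wide_share(items):
    """Return the fraction of items that are open to the whole organisation."""
    if not items:
        return 0.0
    return sum(1 for i in items if i.shared_with == "organisation") / len(items)


inventory = [
    SharedItem("HR/salaries.xlsx", "organisation", True),
    SharedItem("Marketing/brochure.docx", "organisation", False),
    SharedItem("Finance/forecast.xlsx", "team", True),
    SharedItem("Notes/todo.txt", "private", False),
]

for item in overshared(inventory):
    print("Review sharing on:", item.path)  # prints "Review sharing on: HR/salaries.xlsx"

print(f"{organisation_wide_share(inventory):.0%} of items are open to everyone")  # prints "50% ..."
```

Tightening access to the flagged items reduces what an assistant like Copilot can reach on any one user’s behalf.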

Addressing the Security Concerns

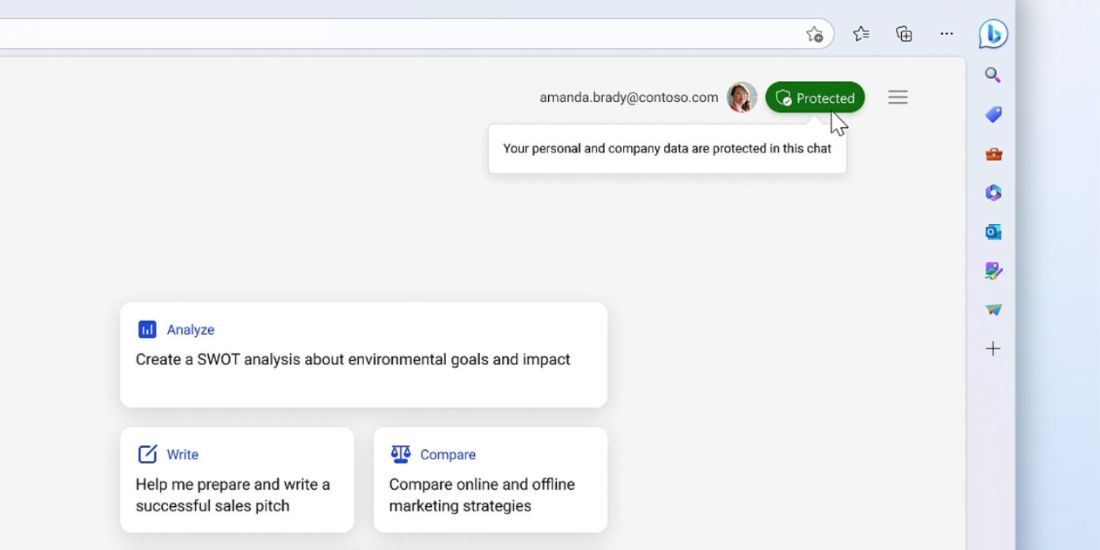

To address these concerns, Microsoft claims to have “established a robust infrastructure to protect data processed by Microsoft Copilot”, and states that data is anonymised and aggregated to prevent the identification of individual users or organisations. If Microsoft’s claims are to be believed, this adherence to privacy standards means that Copilot’s AI capabilities can be used without compromising privacy.

Microsoft promotes transparency and control regarding data collection by Copilot. Users can view the collected data and the reasons for its collection, and they can adjust their data-sharing preferences according to their comfort level. This approach is intended to let users keep control of their data while benefiting from Copilot’s AI assistance.

Conclusion

Microsoft Copilot is a significant advancement in AI-assisted productivity, but companies must address the security implications it presents. By implementing strong security measures and promoting transparency, companies can leverage the power of AI while protecting sensitive data. With the advent of tools like Microsoft Copilot, we are witnessing a new era of AI, where machine learning and deep learning are not just buzzwords but integral components of our daily work.

What are your thoughts on Copilot and AI in general? It’s an interesting area of tech with a lot of potential for generating business growth. Read our recent blog on Copilot.

We actively support a range of agencies with Microsoft 365. Reach out to Ralph today to discuss whether we can introduce IT managed services to improve your business.